Generative Model Basics (Character-Level) - Unconventional Neural Networks in Python and Tensorflow p.1

Hello and welcome to a series where we will just be playing around with neural networks. The idea here is to poke around with various neural networks and do unconventional things with them, like trying to teach a sequence-to-sequence model math, doing classification with a generative model, and so on. I've wanted to cover things like this in tutorials for a while, but I hadn't thought of a way to compile them, so this series will have to do!

To begin this series, you should have a foundation in machine learning and deep learning, with TensorFlow specifically. If you don't, you'll probably have a harder time following along, but you can likely still do it.

At the very least, right now, you will need TensorFlow installed, and Python of course! I am currently using:

Python 3.6

TensorFlow 1.7

If you want to follow along on the CPU, you may run into long training times, but you can still do it with a pip install --upgrade tensorflow. If you plan to follow along with TensorFlow on the GPU, you will also need to install the CUDA Toolkit and the matching cuDNN. See the TensorFlow install page for which versions of cuDNN and the CUDA Toolkit you need. For installing the GPU version of TensorFlow, you can see my TensorFlow-GPU Windows installation tutorial or the Linux TensorFlow-GPU setup tutorial. Both use older versions of TensorFlow, but the steps are the same (get TF, install the CUDA Toolkit, and copy over the cuDNN files).
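To confirm which versions you actually have, a quick check like the following may help. It's a small sketch: it reports the running Python version and, only if TensorFlow can be imported, its tf.__version__ string. It does not check CUDA or cuDNN, and the function name is just for illustration.

```python
import importlib
import importlib.util
import sys

def environment_report():
    """Report the Python version and, if importable, the TensorFlow version."""
    report = {"python": "%d.%d.%d" % sys.version_info[:3], "tensorflow": None}
    # find_spec returns None when the package is not installed at all.
    if importlib.util.find_spec("tensorflow") is not None:
        tf = importlib.import_module("tensorflow")
        report["tensorflow"] = tf.__version__
    return report

if __name__ == "__main__":
    print(environment_report())
```

If "tensorflow" comes back as None, TensorFlow isn't importable from the Python you ran this with.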

Okay, enough on that, let's play! I've personally always liked generative models. They are relatively quick to train and require very little data, yet they can produce results very similar to the input you fed them. They don't appear to have much practical use as of yet, but you can do fun things with them, like making art. Since this series is a little less practical and a little more for fun, let's do it! I'd like us to start with what's known as a Character-Level Generative Neural Network. The "character" part just means it generates new sequences one individual character at a time. In most cases this is used for language-like tasks, but it doesn't HAVE to be. Let's first check it out with a real language, however.

The simplest-to-use implementation of a character-level generative model in TensorFlow that I've seen is the char-rnn-tensorflow project on GitHub from Sherjil Ozair. Just in case things have changed and you want to follow along exactly, the commit I am working with is 401ebfd. Go ahead and grab/clone this package, extract it if necessary, and let's see what we've got here.

We've got a data directory, inside of which we have tinyshakespeare, which houses input.txt. Opening this, we can see it's written like you'd expect a play to be written, with structure like:

NAME: thing person says NEXT NAME... and so on

So this is more than just plain English text; it also has some structure to it. The goal of a generative algorithm is to reproduce something *like* what it was trained on. Let's see how that goes with this sample data. Also, note how small the training data is: 1MB. Very little data is required here.
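Since this is a character-level model, training means learning to predict the next character from the ones before it. The sketch below is my own illustration of that kind of data preparation, not code from char-rnn-tensorflow. It builds a character vocabulary, encodes the text as integer IDs, and cuts it into (input, target) windows where the target is the input shifted one character ahead. The function names and seq_length values are hypothetical; the path matches the directory layout described above.

```python
import os

def build_vocab(text):
    """Map each distinct character to an integer ID (sorted for determinism)."""
    return {ch: i for i, ch in enumerate(sorted(set(text)))}

def make_training_pairs(text, seq_length):
    """Cut the encoded text into non-overlapping (input, target) windows.

    Each target is its input shifted one character ahead, so at every
    position the model is asked to predict the character that comes next.
    """
    vocab = build_vocab(text)
    ids = [vocab[ch] for ch in text]
    pairs = []
    # Each window needs seq_length + 1 IDs so the target can run one past the input.
    for start in range(0, len(ids) - seq_length, seq_length):
        pairs.append((ids[start:start + seq_length],
                      ids[start + 1:start + seq_length + 1]))
    return vocab, pairs

if __name__ == "__main__":
    path = os.path.join("data", "tinyshakespeare", "input.txt")
    seq_length = 50
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            text = f.read()
    else:
        text, seq_length = "hello world", 4  # fallback so the sketch runs anywhere
    vocab, pairs = make_training_pairs(text, seq_length)
    print(len(text), "characters,", len(vocab), "distinct")
    print(len(pairs), "training windows; first:", pairs[0])
```

On the fallback string "hello world" with seq_length=4, this yields two windows, and in each one the target list is the input list moved over by one position.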

We could change some settings, but we can really just run with the defaults. Go ahead and run:

python train.py

The train.py file has defaults in place for the tinyshakespeare dataset. While our model trains, let's poke around. In train.py, we can see all of the flags. We specify where the input.txt file we wish to use is located, where we want to save things, where to log to... hmm, let's look!

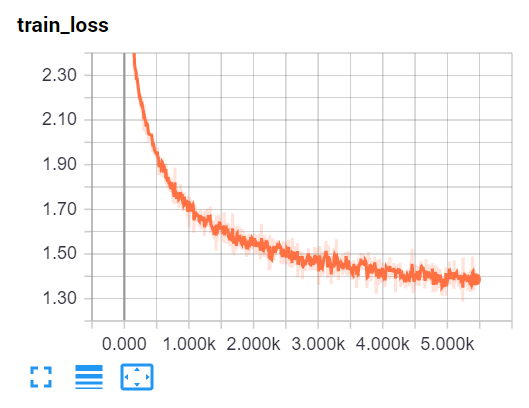

Open another command prompt locally (inside the project directory), and do: tensorboard --logdir=logs/, then navigate to where it says, and you should be able to see a loss graph, like:

It looks like it's already leveling out but still dropping, so we'll let it keep going.
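When a loss curve is noisy, it can be hard to tell "leveling out" from "still dropping." TensorBoard's smoothing slider helps by applying an exponential moving average to the plotted points. Here is a plain version of that idea, run on made-up loss values. TensorBoard's own implementation may differ in details such as bias correction, and ema_smooth is a hypothetical name.

```python
def ema_smooth(values, weight=0.6):
    """Exponentially smooth a sequence of loss values.

    Each smoothed point keeps `weight` of the previous smoothed value and
    takes (1 - weight) of the new raw value; a higher weight gives a smoother curve.
    """
    if not values:
        return []
    smoothed = []
    last = values[0]  # seed with the first raw value
    for v in values:
        last = weight * last + (1 - weight) * v
        smoothed.append(last)
    return smoothed

if __name__ == "__main__":
    # Made-up loss values for illustration, not real training output.
    losses = [4.2, 3.1, 2.6, 2.4, 2.5, 2.2, 2.3, 2.1]
    print([round(x, 3) for x in ema_smooth(losses)])
```

With weight=0 the curve is returned unchanged. As the weight approaches 1, short bumps like the 2.4 to 2.5 uptick get averaged away, leaving the overall trend.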

Getting back to our options, we see we can modify the network's size and number of layers, along with batching, sequence lengths, epochs, saving, decay, and more.
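To get a feel for how batching, sequence length, epochs, and decay interact, here is some back-of-the-envelope arithmetic. It assumes each training step consumes one batch of batch_size sequences of seq_length characters, and that "decay" means multiplying the learning rate by a fixed decay_rate once per epoch. Both are common designs, but they are my assumptions here rather than a reading of train.py, and the numbers are illustrative, not necessarily the project's defaults.

```python
def steps_per_epoch(num_chars, batch_size, seq_length):
    """Full batches of batch_size x seq_length characters in the corpus
    (leftover characters at the end are simply dropped)."""
    return num_chars // (batch_size * seq_length)

def decayed_lr(initial_lr, decay_rate, epoch):
    """Exponential per-epoch decay: multiply by decay_rate once per epoch."""
    return initial_lr * decay_rate ** epoch

if __name__ == "__main__":
    # Illustrative numbers: ~1MB of text, batches of 50 sequences of 50 chars.
    steps = steps_per_epoch(1_000_000, batch_size=50, seq_length=50)
    print(steps, "steps per epoch")  # 1,000,000 // 2,500 = 400
    # At these sizes, a save every 1000 steps lands every 2.5 epochs.
    print(1000 / steps, "epochs between checkpoints")
    for epoch in (0, 10, 49):
        print(epoch, decayed_lr(0.002, 0.97, epoch))
```

Doubling batch_size or seq_length halves the steps per epoch, which also spaces checkpoints further apart in terms of epochs.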

I've actually let this thing fully train, but you can stop it whenever. A checkpoint file is saved every 1000 steps. To sample, just run the sample file:

python sample.py

The output probably looks something like:

b" iDfurm.\nDother shall you by sease, she sequencly of the general\nbroak his puspistirans sooner's land!\n\nHERMIONE:\nAre my wasteful sweeter than did with the added to her:\nmakes I part, thet else?\n\nKING RICHARD II:\nRobbling slaughter, in!\nThe touch my die own men's own jewing all.\n\nNurse:\nWere, friar, if this great Montague, sir, loving\nOrable degin suspection eye to do it;\nAnd if you may I ack no fower.\n\nFirst Citizen:\nEdward the starkly, for one exile abroad\nAnd your eye, that all the complewick"

This is a UTF-8 encoded bytes object, which is why it prints with a b prefix and shows newlines as \n. For now, we don't really need the encoding, so we can remove it to see the output the way we'd expect. Edit sample.py and remove the .encode('utf-8') part. Running again, you should see something like:

singzen flesters. GREEN: O good woman: I cannot bellow you. AUTOLYCUS: COMINIUS: Then, sir? while are my tongue to Rome, I do then! QUEEN ELIZABETH: If by my advance at birth'st make below That I do Leome in his new up of the hearth, And had been agreech those conquest will believe thee? FRIAR LAURENCE: The crown'd her, sir, mute the need in a bade; Thank upon your graces, and she fuit I'll grugs. LORD OF GAUNT: But I, these I would be same over to say. LADY GREY: 'Twas will not there ano
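The difference between the two outputs comes down to Python's bytes and str types. Calling .encode('utf-8') on a string produces a bytes object, and printing a bytes object shows its repr: a b prefix, with newlines displayed as the escape \n. Decoding turns it back into a str that prints with real line breaks. Here is a tiny standalone demonstration with made-up sample text:

```python
text = "HERMIONE:\nAre my wasteful sweeter?"
data = text.encode("utf-8")   # str -> bytes

print(data)                   # prints the bytes repr, with \n shown as an escape
print(data.decode("utf-8"))   # bytes -> str; the newline is a real line break
```

Python switches the repr to double quotes, as in the first output above, when the text contains an apostrophe (like "sooner's") and no double quotes.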

Alternatively, we could keep the encoding and just decode the data in the print statement:

data = model.sample(sess, chars, vocab, args.n, args.prime,
                    args.sample).encode('utf-8')
print(data.decode('utf-8'))

Whether you want the output UTF-8 encoded really just depends on what sort of data you're passing through here. Anyway, neat, right?

Our model has actually learned words, some old-style English, and the format/structure of the play: a NAME and a colon, followed by a newline, some speech, two newlines, and repeat. Very impressive, and this didn't take long at all or need much data!
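Under the hood, sampling means the network outputs a probability for every character in the vocabulary, and something has to turn those probabilities into an actual next character. Two common strategies are greedy picking (always take the most likely character) and weighted random picking (draw a character in proportion to its probability). The args.sample value passed to model.sample in the code above appears to select between strategies along these lines, so check sample.py's help text for its exact options. The sketch below is a standalone illustration with a made-up probability table, not the project's code, and the function names are hypothetical.

```python
import bisect
import itertools
import random

def greedy_pick(probs):
    """Return the index of the most probable character."""
    return max(range(len(probs)), key=lambda i: probs[i])

def weighted_pick(probs, rng=random):
    """Draw an index with probability proportional to probs[i]."""
    cumulative = list(itertools.accumulate(probs))
    # Scale a uniform draw by the total so probs need not sum exactly to 1.
    r = rng.random() * cumulative[-1]
    return bisect.bisect_right(cumulative, r)

if __name__ == "__main__":
    chars = ["a", "b", "c"]
    probs = [0.1, 0.7, 0.2]  # made-up model output for illustration
    print("greedy:", chars[greedy_pick(probs)])
    rng = random.Random(0)
    draws = [chars[weighted_pick(probs, rng)] for _ in range(10)]
    print("weighted:", "".join(draws))
```

Greedy picking tends to fall into repeating the same high-probability phrases. Weighted picking trades some of that safety for the kind of varied output shown above.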

Peeking into our sample file, we also have some flags/defaults that we could change! Let's try changing some things and instead run with:

python sample.py -n=200 --prime="SENTDEX:"

The -n flag dictates how many characters we wish to generate, and --prime is the text we start the generative model off with. The default prime is just a space, but we're going to start it with SENTDEX:, so it will probably structure things as if SENTDEX is a character in the play.

SENTDEX: Enfarce, I'll be untimely soul, I hear nor his name wept mole with me. LADY CAPULET: PETER: Sour fast issue of us, but was gauzes here? TRANIO: I do therein haply Signior Marcius! POMPEY: No toe!

Hah, alright. No toes for Pompey!
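Conceptually, priming means running the prime text through the model first so its state reflects it, then repeatedly sampling a character and feeding it back in, n times. The sketch below shows that loop with a deliberately tiny stand-in: a character bigram model built from counts, where the next character depends only on the previous one. This is not the project's RNN, which carries much longer context in its hidden state. The function names, the training string, and the seed are all hypothetical.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each character, which characters follow it."""
    follows = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        follows[prev][nxt] += 1
    return follows

def generate(follows, prime, n, rng):
    """Start from the prime text, then sample up to n more characters one at a time."""
    if not prime:
        raise ValueError("prime must contain at least one character")
    out = list(prime)
    for _ in range(n):
        options = follows.get(out[-1])  # .get does not add keys to the defaultdict
        if not options:  # the last character was never followed by anything
            break
        chars, weights = zip(*options.items())
        out.append(rng.choices(chars, weights=weights)[0])
    return "".join(out)

if __name__ == "__main__":
    corpus = "SENTDEX:\nNo toe!\n\nPOMPEY:\nNo toes for Pompey!\n\n"
    model = train_bigram(corpus)
    print(generate(model, prime="SENTDEX:", n=40, rng=random.Random(0)))
```

Swapping the bigram counts for an RNN's predicted probabilities keeps the same loop shape. The difference is how much of the preceding text informs each prediction.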

So, of course, the next question you must have is... can we do this with... code? Exactly what I was thinking. Let's try to train a model to generate Python-like code. Bonus points if it runs, but I doubt it will.
